Increasingly, we’re interacting with our gadgets by talking to them. Old friends like Alexa and Siri are now being joined by automotive assistants like Apple CarPlay and Android Auto, and even apps sensitive to voice biometrics and commands.

But what if the technology itself could be built using voice?

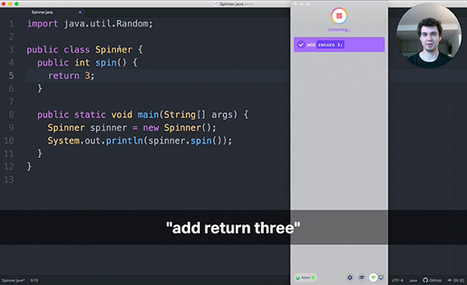

That’s the premise behind voice coding, an approach to developing software using voice instead of a keyboard and mouse to write code. Through voice-coding platforms, programmers utter commands to manipulate code and create custom commands that cater to and automate their workflows.
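
To make the idea of custom commands concrete, here is a minimal Python sketch of a command registry that maps spoken phrases to editing actions. It assumes speech has already been transcribed to text. The phrases and the `register`/`handle` names are hypothetical and are not the actual syntax of Serenade, Talon, or any other platform.

```python
from typing import Callable, Dict, List

# Hypothetical registry: spoken phrase -> action that edits a buffer of code lines.
Action = Callable[[List[str]], None]
COMMANDS: Dict[str, Action] = {}


def register(phrase: str) -> Callable[[Action], Action]:
    """Decorator that binds a spoken phrase to an editing action."""
    def decorator(action: Action) -> Action:
        COMMANDS[phrase.lower()] = action
        return action
    return decorator


@register("new function")
def insert_function_stub(buffer: List[str]) -> None:
    # A custom command that automates a repetitive step:
    # one utterance inserts a whole function skeleton.
    buffer.extend(["def name():", "    pass"])


@register("delete line")
def delete_last_line(buffer: List[str]) -> None:
    # Remove the most recently added line, if any.
    if buffer:
        buffer.pop()


def handle(transcript: str, buffer: List[str]) -> bool:
    """Run the action bound to a transcribed utterance; return False if unknown."""
    action = COMMANDS.get(transcript.strip().lower())
    if action is None:
        return False
    action(buffer)
    return True


if __name__ == "__main__":
    code: List[str] = []
    handle("New function", code)
    handle("delete line", code)
    print(code)  # ['def name():']
```

Real platforms layer speech recognition, context awareness, and editor integration on top of this basic idea of binding phrases to actions.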

Voice coding isn’t as simple as it may seem; layers of complex technology sit behind it.

From Voice to Code

Two of the leading programming-by-speech platforms today offer different approaches to the problem of reciting code to a computer.

One, Serenade, acts a little like a digital assistant: it lets you describe the commands you’re encoding without necessarily dictating each instruction word for word.

Another, Talon, provides more granular control over each line, which in turn demands a slightly more detail-oriented grasp of each task being programmed into the machine. The original article illustrates the difference with a step-by-step guide, for both Serenade and Talon, to generating the Python code that prints the word “hello” onscreen.
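
The contrast between the two styles can be sketched as a toy Python model in which both paths produce the same line, `print("hello")`. The spoken phrases (`add print hello`, `word print`, `paren`, and so on) and the function names are hypothetical illustrations, not the real command grammars of Serenade or Talon.

```python
import re
from typing import List

# Illustrative only: these phrasings are hypothetical and are NOT the real
# command grammars of Serenade or Talon.


def descriptive_command(utterance: str) -> str:
    """Assistant style: describe the intent and let the tool fill in the syntax."""
    match = re.fullmatch(r"add print (\w+)", utterance.strip().lower())
    if match is None:
        raise ValueError(f"unrecognized command: {utterance!r}")
    return f'print("{match.group(1)}")'


# Spoken token -> literal characters, for granular dictation.
SYMBOLS = {
    "paren": "(",
    "close paren": ")",
    "quote": '"',
}


def dictate_tokens(tokens: List[str]) -> str:
    """Granular style: every symbol is spoken explicitly, one token at a time."""
    out = []
    for token in tokens:
        if token.startswith("word "):
            # "word X" inserts the literal word X.
            out.append(token[len("word "):])
        elif token in SYMBOLS:
            out.append(SYMBOLS[token])
        else:
            raise ValueError(f"unrecognized token: {token!r}")
    return "".join(out)


if __name__ == "__main__":
    a = descriptive_command("add print hello")
    b = dictate_tokens(
        ["word print", "paren", "quote", "word hello", "quote", "close paren"]
    )
    print(a)       # print("hello")
    print(a == b)  # True
```

The descriptive path needs one utterance but relies on the tool to infer the syntax. The granular path needs six utterances but leaves nothing to inference, which mirrors the extra attention to detail that finer control requires.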

Voice coding is still in its infancy, and its potential to gain widespread adoption depends on how tied software engineers are to the traditional keyboard-and-mouse model of writing code.

But voice coding opens up new possibilities, perhaps even a future in which brain-computer interfaces translate what you’re thinking directly into code, or into software itself.

Read the original, unedited article at https://spectrum.ieee.org/computing/software/programming-by-voice-may-be-the-next-frontier-in-software-development