|

Scooped by

nrip

|

Use a Language Your Company Can Support

That, in a nutshell, is the most important criterion. A natural corollary is that if you want to introduce a new programming language to a company, it is your responsibility to convince the business of the language's benefit. You need to generate organizational support for the language before you start using it. This can be difficult, it can be uncomfortable, and you could be told no. But without that organizational support, your new service is dead in the water.

Read more at https://sookocheff.com/post/engineering-management/the-most-important-criteria-for-choosing-a-programming-language/

|

Scooped by

nrip

|

Python creator Guido van Rossum reveals the strengths and weaknesses of one of the world's most popular programming languages.

Mobile app development is one of the key growth fields in which Python hasn't gained any traction, despite dominating machine learning with libraries like NumPy and Google's TensorFlow, as well as backend services automation. Python isn't exactly boxed into high-end hardware, but that's where it has gravitated to, and it has been left out of mobile and the browser, even if it's popular on the backend of these services, he said.

Why? Python simply guzzles too much memory and energy from hardware, he said. For similar reasons, he said Python probably doesn't have a future in the browser despite WebAssembly, a standard that is helping make more powerful applications on websites.

Mobile app development in Python is a "bit of a sore point", said van Rossum in a recent video Q&A for Microsoft Reactor.

"It would be nice if mobile apps could be written in Python. There are actually a few people working on that but CPython has 30 years of history where it's been built for an environment that is a workstation, a desktop or a server and it expects that kind of environment and the users expect that kind of environment," he said.

"The people who have managed to cross-compile CPython to run on an Android tablet or even on iOS, they find that it eats up a lot of resources," he said. "Compared to what the mobile operating systems expect, Python is big and slow. It uses a lot of battery charge, so if you're coding in Python you would probably very quickly run down your battery and quickly run out of memory," he said.

"Python is a pretty popular language [at the backend]. At Google I worked on projects that were sort of built on Python, although most Google stuff wasn't. At Dropbox, the whole Dropbox server is built on Python. On the other hand, if you look at what runs in the browser, that's the world of JavaScript and unless it translates to JavaScript, you can't run it," van Rossum said.

"I don't mind so much. Different languages have to have different goals. I mean, nobody is asking Rust when you can write Rust in the browser; at least that wouldn't seem a useful sort of target for Rust either. Python should focus on the application areas where it's good and for the web that's the backend and for scientific data processing."

Watch the Microsoft Q&A with him at https://www.youtube.com/watch?v=aYbNh3NS7jA

Read the original article at https://www.zdnet.com/article/python-programming-why-it-hasnt-taken-off-in-the-browser-or-mobile-according-to-its-creator/

|

Scooped by

nrip

|

The author was applying for a program, and one of the tasks he was given was to build an ERC20 token in less than 48 hours. In his words:

This was my first attempt at blockchain development and I didn’t know where to start. I had knowledge of the cryptocurrency world from a user's standpoint but not as a developer. I searched around for materials to aid in my task but most were not up to date. This is an up-to-date write-up of the steps I took while building this token, to help others who are interested in building their own token.

- Step 1: Contract code

- Step 2: Create Ethereum wallet with MetaMask

- Step 3: Get Ropsten Ethers

- Step 4: Edit the contract code

- Step 5: Deploy Contract Code on Remix

- Step 6: Publish and Verify Contract

- Step 7: Add token to your wallet

Congrats! You’ve just created your token.

Read this excellently written starter at https://www.codementor.io/@vahiwe/building-your-own-ethereum-based-ecr20-token-in-less-than-an-hour-16f44bq67i
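The contract code in Step 1 implements the ERC-20 interface. As a rough mental model only, the bookkeeping behind the standard's `balanceOf`, `transfer`, `approve`, `allowance`, and `transferFrom` functions can be sketched in plain Python. This is not Solidity and not the article's contract; the class name `ToyToken` and the account labels are hypothetical.

```python
from typing import Dict, Tuple


class ToyToken:
    """In-memory model of ERC-20 style bookkeeping (illustrative only)."""

    def __init__(self, owner: str, total_supply: int) -> None:
        self.total_supply = total_supply
        # account -> token balance; the deployer starts with the whole supply
        self.balances: Dict[str, int] = {owner: total_supply}
        # (owner, spender) -> amount the spender may move on the owner's behalf
        self.allowances: Dict[Tuple[str, str], int] = {}

    def balance_of(self, account: str) -> int:
        return self.balances.get(account, 0)

    def transfer(self, sender: str, recipient: str, amount: int) -> None:
        if amount < 0 or self.balance_of(sender) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] = self.balance_of(sender) - amount
        self.balances[recipient] = self.balance_of(recipient) + amount

    def approve(self, owner: str, spender: str, amount: int) -> None:
        self.allowances[(owner, spender)] = amount

    def allowance(self, owner: str, spender: str) -> int:
        return self.allowances.get((owner, spender), 0)

    def transfer_from(self, spender: str, owner: str, recipient: str, amount: int) -> None:
        if self.allowance(owner, spender) < amount:
            raise ValueError("allowance exceeded")
        self.transfer(owner, recipient, amount)
        self.allowances[(owner, spender)] -= amount
```

A real token enforces the same invariants on-chain: transfers never create or destroy tokens, and a spender can move no more than the owner approved.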

|

Scooped by

nrip

|

Whatever business a company may be in, software plays an increasingly vital role, from managing inventory to interfacing with customers. Software developers, as a result, are in greater demand than ever, and that’s driving the push to automate some of the easier tasks that take up their time. Productivity tools like Eclipse and Visual Studio suggest snippets of code that developers can easily drop into their work as they write. These automated features are powered by sophisticated language models that have learned to read and write computer code after absorbing thousands of examples. But like other deep learning models trained on big datasets without explicit instructions, language models designed for code-processing have baked-in vulnerabilities. A new framework built by MIT and IBM researchers finds and fixes weaknesses in automated programming tools that leave them open to attack. It’s part of a broader effort to harness artificial intelligence to make automated programming tools smarter and more secure.

|

Scooped by

nrip

|

It’s still tough to opt out of Apple and Google’s ecosystems. But some app makers are coming around to the web’s upsides.

The web-first approach is one that some developers have been rediscovering as discontent with Apple’s and Google’s app stores boils over. Launching with a mobile app just isn’t as essential as it used to be, and according to some developers, it may not be necessary at all.

New examples are emerging of developers building web apps smartly for mobile and skipping the app stores altogether, demonstrating what a modern, fast web app can do.

There’s just one problem with this zeal for web apps: On iOS, Apple doesn’t support several progressive web app features that developers say are necessary to build web apps that offer all the power and usability of a native app. iOS web apps, for instance, can’t deliver notifications, and if you install them on the home screen, they don’t support background audio playback. They also don’t integrate with the Share function in iOS and won’t appear in iOS 14’s App Library section. Android, by contrast, supports most of those features, and even allows websites to include an “Install App” button.

Read the original article at https://www.fastcompany.com/90623905/ios-web-apps to see some of the examples it discusses. I personally tried out two of them, 1Feed and Wormhole, liked them, and started using them after reading the article.

|

Scooped by

nrip

|

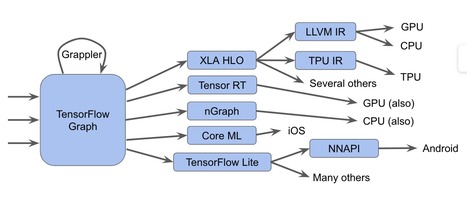

Can the Raspberry Pi 400 board be used for Machine Learning? The answer is, yes!

TensorFlow Lite on Raspberry Pi 4 can achieve performance comparable to NVIDIA’s Jetson Nano at a fraction of the cost.

Method #3: Build from Source

Packaging a code-base is a great way to learn more about it (especially when things do not go as planned). I highly recommend this option! Building TensorFlow has taught me more about the framework’s complex internals than any other ML exercise.

The TensorFlow team recommends cross-compiling a Python wheel (a type of binary Python package) for Raspberry Pi [1]. For example, you can build a TensorFlow wheel for a 32-bit or 64-bit ARM processor on a computer running an x86 CPU instruction set.

Before you get started, install the following prerequisites on your build machine:

- Docker

- bazelisk (bazel version manager, like nvm for Node.js or rvm for Ruby)

Next, pull down TensorFlow’s source code from git.

$ git clone https://github.com/tensorflow/tensorflow.git

$ cd tensorflow

Check out the branch you want to build using git, then run the following to build a wheel for a Raspberry Pi 4 running a 32-bit OS and Python 3.7:

$ git checkout v2.4.0-rc2

$ tensorflow/tools/ci_build/ci_build.sh PI-PYTHON37 \
tensorflow/tools/ci_build/pi/build_raspberry_pi.sh

For 64-bit support, add AARCH64 as an argument to the build_raspberry_pi.sh script.

$ tensorflow/tools/ci_build/ci_build.sh PI-PYTHON37 \
tensorflow/tools/ci_build/pi/build_raspberry_pi.sh AARCH64

The official documentation can be found at tensorflow.org/install/source_rpi.

Grab a snack and water while you wait for the build to finish! On my Threadripper 3990X (64 cores, 128 threads), compilation takes roughly 20 minutes.

Read the whole article, including the other installation methods, at https://towardsdatascience.com/3-ways-to-install-tensorflow-2-on-raspberry-pi-fe1fa2da9104
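If the build is scripted, the 32-bit and 64-bit invocations above differ only in the trailing `AARCH64` argument. A small sketch of a wrapper that assembles the command follows; the helper name `pi_build_command` is hypothetical, while the script paths and the `PI-PYTHON37` tag are copied from the commands above. It only builds the argument list, which would then be run from the root of the TensorFlow checkout.

```python
import shlex
from typing import List

# Paths are relative to the root of the TensorFlow git checkout.
CI_BUILD = "tensorflow/tools/ci_build/ci_build.sh"
PI_BUILD = "tensorflow/tools/ci_build/pi/build_raspberry_pi.sh"


def pi_build_command(aarch64: bool = False, container: str = "PI-PYTHON37") -> List[str]:
    """Return the argv list for the Raspberry Pi wheel build.

    aarch64=False targets a 32-bit OS; aarch64=True appends the
    AARCH64 argument for a 64-bit build.
    """
    cmd = [CI_BUILD, container, PI_BUILD]
    if aarch64:
        cmd.append("AARCH64")
    return cmd


def as_shell(cmd: List[str]) -> str:
    """Render the argv list as a single copy-pasteable shell line."""
    return shlex.join(cmd)
```

Passing the list to `subprocess.run(cmd, check=True)` avoids shell quoting issues, and `as_shell` is handy for logging what was executed.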

|

Scooped by

nrip

|

Coding is becoming an increasingly vital skill. As more people learn how to code, neuroscientists are beginning to unlock the mystery behind what happens in people’s brains when they “think in code.”

“Computer programming is not an old skill, so we don’t have an innate module in the brain that does the processing for us,” says Anna Ivanova, a graduate student at MIT’s Department of Brain and Cognitive Sciences. “That means we have to use some of our existing neural systems to process code.”

Ivanova and her colleagues studied two brain systems that might be good candidates for processing code: the multiple demand system, which tends to be engaged in cognitively challenging tasks such as solving math problems or logical reasoning, and the language system. Despite the structural similarities between programming languages and natural languages, the researchers found that the brain does not engage the language system; it activates the multiple demand system.

Mulling over a computer program is not like thinking in everyday language, but it's not pure logic either.

Read this very interesting article on IEEE Spectrum: https://spectrum.ieee.org/tech-talk/computing/software/what-does-your-brain-do-when-you-read-code

|

Scooped by

nrip

|

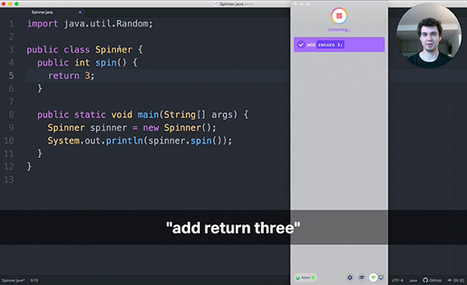

Increasingly, we’re interacting with our gadgets by talking to them. Old friends like Alexa and Siri are now being joined by automotive assistants like Apple CarPlay and Android Auto, and even apps sensitive to voice biometrics and commands. But what if the technology itself could be built using voice?

That’s the premise behind voice coding, an approach to developing software using voice instead of a keyboard and mouse to write code. Through voice-coding platforms, programmers utter commands to manipulate code and create custom commands that cater to and automate their workflows. Voice coding isn’t as simple as it seems, with layers of complex technology behind it.

From Voice to Code

Two of the leading programming-by-speech platforms today offer different approaches to the problem of reciting code to a computer. One, Serenade, acts a little like a digital assistant, allowing you to describe the commands you’re encoding without mandating that you dictate each instruction word-for-word. Another, Talon, provides more granular control over each line, which also requires a slightly more detail-oriented grasp of each task being programmed into the machine. The original article includes a simple step-by-step guide, in Serenade and in Talon, to generating the Python code needed to print the word “hello” onscreen.

Voice coding is still in its infancy, and its potential to gain widespread adoption depends on how tied software engineers are to the traditional keyboard-and-mouse model of writing code. But voice coding opens up possibilities, maybe even a future where brain-computer interfaces directly transform what you’re thinking into code, or into software itself.

Read the original unedited article at https://spectrum.ieee.org/computing/software/programming-by-voice-may-be-the-next-frontier-in-software-development

|

Scooped by

nrip

|

Software developers found themselves working very hard throughout 2020 as many businesses were forced to switch to entirely digital operations in a very short period of time. But according to a new report from the Consortium for Information and Software Quality (CISQ), this haste came at a cost: something to the tune of $2.1 trillion, and billions in waste.

CISQ's 2020 report, The Cost of Poor Software Quality in the US, looked at the financial impact of software projects that went awry or otherwise left companies with a larger bill by creating additional headaches for them. According to the report:

- unsuccessful IT projects alone cost US companies $260bn in 2020,

- software problems in legacy systems cost businesses $520bn,

- and software failures in operational systems left a dent of $1.56 trillion.

Why did poor-quality software cost companies more in 2020 than in previous years?

As any software specialist or IT architect will tell you, in software development speed is traded off against quality and security. Time was a luxury that many businesses couldn't afford in 2020, with the pandemic forcing offices to shut and prompting rapid digitization. As companies brought forward their digital transformation plans, software development projects expanded rapidly.

The attitudes of most business leaders towards digital innovation are also archaic, particularly when it comes to software.

"Software quality lags behind other objectives in most organizations. That lack of primary attention to quality comes at a steep cost. While organizations can monetize the business value of speed, they rarely measure the offsetting cost of poor quality."

It takes just one major outage or security breach to eliminate the value gained by speed to market. Disciplined software engineering matters when the potential losses are in the trillions.

As software is developed and used around the world more than ever before, the cost of poor software quality is rising, and it remains mostly hidden. Organizations spend far too much time finding and fixing defects in new software and dealing with legacy software that cannot easily be evolved and modified.

Read the original, unedited article at https://www.techrepublic.com/index.php/category/10250/4/index.php/article/developers-these-botched-software-rollouts-are-costing-businesses-billions/

|

Scooped by

nrip

|

With Microsoft's commitment to a continuously updated Windows 10 operating system and Microsoft 365 productivity suite, old features and applications are removed almost as fast as new features are introduced. The year 2021 will see a number of important Microsoft applications reach a state of deprecation and retirement.

2021 Microsoft feature retirement dates:

- TLS 1.0 and TLS 1.1: Jan. 11, 2021

- Skype for Business Online Connector: Feb. 15, 2021

- Microsoft Edge Legacy: March 9, 2021

- Microsoft 365 support for IE 11: Aug. 17, 2021

- Visio Web Access: Sept. 30, 2021

|

Scooped by

nrip

|

Providing clear steps to reproduce an issue is a benefit for everyone

When I’ve had to contact a company’s technical support through a form, I provide a ridiculously detailed description of what the issue is, when it happens, the specific troubleshooting that narrows it down, and (if the form allows it) screenshots. I suspect the people on the other end aren’t used to that, because I know it is not what happens most of the time when people submit issues to me.

What’s the benefit of an effective bug report?

There are two sides to this: one is the efficiency of the interaction between the bug reporter and the (hopeful) bug fixer, and the other is the actual likelihood that the issue will ever be fixed.

Why do we need “repro steps”?

The first part of fixing a problem is to make sure you understand it and can see it in action. If you can’t see the problem happen consistently, you can’t be sure you’ve fixed it. This is not just a software development concept; it is true of nearly everything. If someone tells me the faucet is dripping, I’m going to want to see the drip happening before I try to fix it… then when I look and see it not dripping, I can feel confident that I resolved the problem.

Is it possible to provide too much information?

I’d rather have too much detail than too little, but I also feel that the bug reporter shouldn’t be expected to track down every possible variable or to try to fix the problem.

Human Nature and Incomplete Issues

I mentioned two sides to this: the efficiency of interaction and the likelihood an issue will be fixed. If you assume someone will ask for all the missing info, even if it takes a lot longer, then the only benefit of a complete bug report is efficiency. In reality though, developers are human, and when they see an incomplete issue where it could take many back-and-forth conversations to get the details they need, they will avoid it in favor of one that they can pick up and work on right now with no delay. Your issue, which could be more important than anything else in the queue, could end up being ignored because it is unclear.

A detailed bug report, with clear repro steps, is a win for everyone.

Read this entire unedited super post at https://www.duncanmackenzie.net/blog/creating-an-effective-bug-report/

|

Scooped by

nrip

|

ONNX is an open format built to represent machine learning models. ONNX defines a common set of operators (the building blocks of machine learning and deep learning models) and a common file format to enable AI developers to use models with a variety of frameworks, tools, runtimes, and compilers. It was introduced in September 2017 by Microsoft and Facebook.

ONNX breaks the dependence between frameworks and hardware architectures. It has very quickly emerged as the default standard for portability and interoperability between deep learning frameworks.

Before ONNX, data scientists found it difficult to choose among the range of AI frameworks available. Developers may prefer a certain framework at the outset of a project, during the research and development stage, but may require a completely different set of features for production. Organizations are thus forced to resort to creative and often cumbersome workarounds, including translating models by hand.

The ONNX standard aims to bridge the gap and enable AI developers to switch between frameworks based on the project’s current stage. Currently, the frameworks supported by ONNX are Caffe, Caffe2, Microsoft Cognitive Toolkit, MXNet, and PyTorch. ONNX also offers connectors for other standard libraries and frameworks.

Two use cases where ONNX has been successfully adopted include:

- TensorRT: NVIDIA’s platform for high performance deep learning inference. It utilises ONNX to support a wide range of deep learning frameworks.

- Qualcomm Snapdragon NPE: The Qualcomm neural processing engine (NPE) SDK adds support for neural network evaluation to mobile devices. While NPE directly supports only the Caffe, Caffe2 and TensorFlow frameworks, the ONNX format helps it indirectly support a wider range of frameworks.

The ONNX standard helps by allowing a model to be trained in the preferred framework and then run anywhere in the cloud. Models from frameworks including TensorFlow, PyTorch, Keras, MATLAB, and SparkML can be exported and converted to the standard ONNX format. Once the model is in the ONNX format, it can run on different platforms and devices.

ONNX Runtime is the inference engine for deploying ONNX models to production. Its features include:

- It is written in C++ and has C, Python, C#, and Java APIs to be used in various environments.

- It can be used on both cloud and edge and works equally well on Linux, Windows, and Mac.

- ONNX Runtime supports DNN and traditional machine learning. It can integrate with accelerators on different hardware platforms such as NVIDIA GPUs, Intel processors, and DirectML on Windows.

- ONNX Runtime offers extensive production-grade optimisation, testing, and other improvements.

Access the original unedited post at https://analyticsindiamag.com/onnx-standard-and-its-significance-for-data-scientists/

Access the ONNX website at https://onnx.ai/

Start using ONNX: access the GitHub repo at https://github.com/onnx/onnx

|

Scooped by

nrip

|

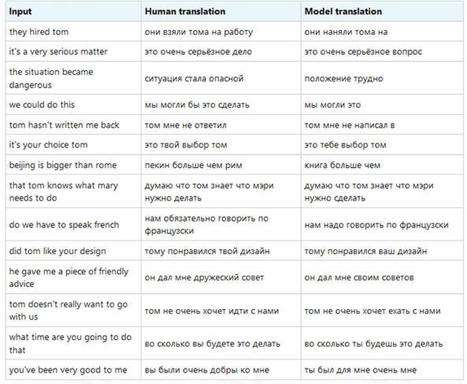

In the previous article, we built a deep learning-based model for automatic translation from English to Russian. In this article, we’ll train and test this model. Here we'll create a Keras tokenizer that will build an internal vocabulary out of the words found in the parallel corpus, use a Jupyter notebook to train and test our model, and try running our model with self-attention enabled.
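To make the vocabulary-building step concrete, here is a minimal pure-Python sketch of the idea: count the words in a corpus and give more frequent words lower integer indices, starting at 1 so that 0 stays free for padding. It is a simplified stand-in, not the Keras tokenizer itself; the function names `build_vocab` and `encode`, the whitespace-only splitting, and the alphabetical tie-breaking are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List


def build_vocab(sentences: List[str]) -> Dict[str, int]:
    """Map each word to an index, most frequent word first (index 1)."""
    counts = Counter(word for s in sentences for word in s.lower().split())
    # Sort by descending frequency; break ties alphabetically so the
    # result is deterministic (an illustrative choice).
    ordered = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return {word: index for index, (word, _) in enumerate(ordered, start=1)}


def encode(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Turn a sentence into word indices, skipping out-of-vocabulary words."""
    return [vocab[w] for w in sentence.lower().split() if w in vocab]


corpus = ["the cat sat", "the dog sat down"]
vocab = build_vocab(corpus)
encoded = encode("the dog barked", vocab)  # "barked" is unknown and dropped
```

For a parallel corpus, one such vocabulary would typically be built per language, so that source and target sentences are encoded independently.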

|

Scooped by

nrip

|

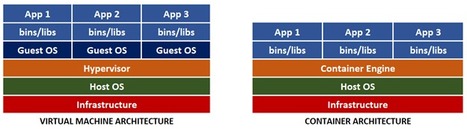

This article provides experienced developers with a comprehensive look at containerization: where it came from, how it works, and the typical tools you'd use to build and deploy containerized cloud native apps.

Here we: look at a brief history of how containerization started, explain what a modern container is, talk about how to build a container and copy an application you've written into it, explain why you'd want to push your container to a registry and how you can deploy straight from registries into production, and discuss deployment.

Rise of the Modern Container

Containerization took the IT industry by storm with the popularization of Docker. Containers were similar to VMs, but without the guest operating system (OS), leaving a much simpler package.

The "works on my machine" excuses from developers are no longer an issue, as the application and its dependencies are self-contained and shipped together in a single unit called a container image. Images are ready-to-deploy copies of apps, while container instances created from those images usually run in a cloud platform such as Azure.

The new architecture lacks the hypervisor since it is no longer needed. However, we still need to manage the new container, so the container engine concept was introduced.

Containers are immutable, meaning that you can’t change a container image during its lifetime: you can't apply updates, patches, or configuration changes. If you must update your application, you should build a new image (which is essentially a changeset atop an existing container image) and redeploy it. Immutability makes container deployment easy and safe and ensures that deployed applications always work as expected, no matter where.

Compared to the virtual machine, the new container is extremely lightweight and portable. Containers also boot much faster. Due to their small size, containers help maximize use of the host OS and its resources.

You can run Linux and Windows programs in Docker containers. The Docker platform runs natively on Linux and Windows, and Docker’s tools enable developers to build and run containers on Linux, Windows, and macOS.

You can't use a container to run apps living on the local filesystem. However, you can access files outside a Docker container using bind mounts and volumes. They are similar, but bind mounts can point to any directory on the host computer and aren't managed by Docker directly.

A Docker container can access physical host hardware, like a GPU, on Linux but not on Windows. This is because Docker on Linux runs on the kernel directly, while Docker on Windows works in a virtual machine, as Windows doesn't have a Linux kernel to communicate with directly.

Read this entire introduction to containers at CodeProject: https://www.codeproject.com/Articles/5298350/An-Introduction-to-Containerization
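The difference between bind mounts and volumes shows up in how a container is started. Below is a minimal sketch, assuming Docker's `--mount` flag syntax (`type=bind` or `type=volume`, with `source` and `target` keys). The helper names, the image name `example-app:latest`, and all paths are hypothetical, and the code only assembles the argument list without invoking Docker.

```python
from typing import List, Tuple

Mount = Tuple[str, str, str]  # (kind, source, target)


def mount_flag(kind: str, source: str, target: str) -> str:
    """Build the value for one --mount option.

    kind="bind":   source is a directory on the host, not managed by Docker.
    kind="volume": source is the name of a Docker-managed volume.
    """
    if kind not in ("bind", "volume"):
        raise ValueError(f"unsupported mount type: {kind}")
    return f"type={kind},source={source},target={target}"


def docker_run_args(image: str, mounts: List[Mount]) -> List[str]:
    """Return the argv list for `docker run` with the given mounts."""
    args = ["docker", "run", "--rm"]
    for kind, source, target in mounts:
        args += ["--mount", mount_flag(kind, source, target)]
    args.append(image)
    return args


args = docker_run_args(
    "example-app:latest",  # hypothetical image name
    [
        ("bind", "/home/dev/project", "/app"),  # host directory
        ("volume", "app-data", "/var/lib/app"),  # Docker-managed volume
    ],
)
```

The resulting list can be passed to `subprocess.run(args, check=True)` on a machine where Docker is installed.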

|

Scooped by

nrip

|

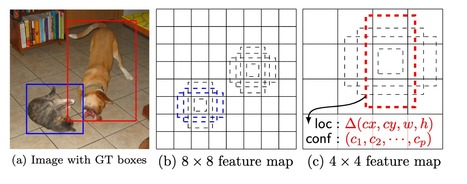

This paper introduces SSD, a fast single-shot object detector for multiple categories.

The SSD approach is based on a feed-forward convolutional network that produces a fixed-size collection of bounding boxes and scores for the presence of object class instances in those boxes, followed by a non-maximum suppression step to produce the final detections.

SSD discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location. At prediction time, the network generates scores for the presence of each object category in each default box and produces adjustments to the box to better match the object shape. Additionally, the network combines predictions from multiple feature maps with different resolutions to naturally handle objects of various sizes.

The SSD model is simple relative to methods that require object proposals because it completely eliminates proposal generation and the subsequent pixel or feature resampling stage, and encapsulates all computation in a single network. This makes SSD easy to train and straightforward to integrate into systems that require a detection component.

Experimental results on the PASCAL VOC, MS COCO, and ILSVRC datasets confirm that SSD has comparable accuracy to methods that utilize an additional object proposal step and is much faster, while providing a unified framework for both training and inference. Compared to other single-stage methods, SSD has much better accuracy, even with a smaller input image size.

Code is available at https://github.com/weiliu89/caffe/tree/ssd .

Read the paper at https://arxiv.org/pdf/1512.02325.pdf
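The final non-maximum suppression step mentioned in the summary can be illustrated in a few lines. This is a generic greedy NMS sketch in plain Python, not the paper's Caffe implementation; the function names, the corner-coordinate box format, and the 0.45 overlap threshold are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union (Jaccard overlap) of two boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.45) -> List[int]:
    """Return indices of the boxes to keep, highest score first.

    A box is kept only if it does not overlap any already-kept,
    higher-scoring box by more than iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)  # the second box overlaps the first and is dropped
```

In a multi-class detector this would typically be run separately for each object category, since boxes of different classes should not suppress one another.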

|

Scooped by

nrip

|

Technical careers specialist Dice dove into job posting data to chart the salaries associated with popular programming languages, finding that Microsoft's TypeScript fares well on both counts.

The job data comes from Burning Glass, which provides real-time job market analytics. Dice combed through the data to determine which programming languages are most in demand and how much they pay. Those are two vital questions as technologists and developers everywhere decide which skills to learn next.

See the attached photograph.

TypeScript pays fairly well (fifth), but really shines in projected 10-year growth, where an astounding predicted rate of 60 percent dwarfs all other languages.

Dice opined more about TypeScript specifically.

"It's worth calling out TypeScript here. Technically, it's a superset of the ultra-popular and well-established JavaScript, which means that whatever you code in it is transpiled to JavaScript. That being said, many programming-language rankings (such as RedMonk) treat it as a full programming language. However you define it, it's clear that the language is on a strong growth trajectory, paired with a solid median salary. If you're looking for a new programming language to learn, keep an eye on it."

Read the whole report here: https://visualstudiomagazine.com/articles/2021/04/01/typescript-csharp.aspx

For a quickstart to TypeScript for those of you who know JavaScript, look here: https://www.typescriptlang.org/docs/handbook/typescript-in-5-minutes.html

|

Scooped by

nrip

|

For some of us (isolates, happy in the dark) code is therapy, an escape and a path to hope in a troubled world.

A little over a year ago, as the Covid-19 lockdowns were beginning to fan out across the globe, most folks grasped for toilet paper and canned food. The thing I reached for: a search function.

Reductively, programming consists of little puzzles to be solved. Not just inert jigsaws on living room tables, but puzzles that breathe with an uncanny life force. Puzzles that make things happen, that get things done, that automate tedium or allow for the publishing of words across the world.

Break the problem into pieces. Put them into a to-do app (I use and love Things). This is how a creative universe is made. Each day, I’d brush aside the general collapse of society that seemed to be happening outside of the frame of my life, and dive into search work, picking off a to-do. Covid was large; my to-do list was reasonable.

The real joy of this project wasn’t just in getting the search working but the refinement, the polish, the edge bits. Getting lost for hours in a world of my own construction. Even though I couldn’t control the looming pandemic, I could control this tiny cluster of bits.

The whole process was an escape, but an escape with forward momentum. Getting the keyboard navigation styled just right, shifting the moment the search payload was delivered, finding a balance of index size and search usefulness. And most important, keeping it all light, delightfully light. And then writing it up, making it a tiny “gist” on GitHub, sharing with the community. It’s like an alley-oop to others: Go ahead, now you use it on your website. Super fast, keyboard-optimized, client side Hugo search.

It's not perfect, but it’s darn good enough.

Read the original story at https://www.wired.com/story/healing-power-javascript-code-programming/

|

Scooped by

nrip

|

If we confuse shipping features for building a product, we will eventually see all forward progress cease. If we are continually adding new features, the overall complexity of the software will steadily increase, and the surface area that we need to maintain will grow to an unsustainable state. I’m not suggesting that adding new features is bad, but we need to add them with the long-term product in mind. We need to see a continuous focus on restructuring/improving the product as part of our job, not a tax that takes us away from adding new features. A common pattern in large software systems is that they grow in scope over time, leading to a state where they can no longer move quickly or keep up with the regular ongoing work needed. Eventually they die out or someone must rebuild them from the ground up. The business or the engineering team makes this decision because it seems like the easiest path forward. This doesn’t have to be the case, but to avoid it requires a mindset change. A focus on reducing complexity and sustainable growth. A preference for enhancing our core functionality instead of adding new capabilities. There are definite reasons for new features, they can be critical to the product, but we must build them with an awareness of their cost. We also need to be aware of what we are celebrating and creating incentives around. Is work done to improve maintainability, efficiency and existing functionality given equal weight to shipping something new and shiny? I suspect that if it were, we would have more stable and reliable software that could avoid the eventual demise of so many projects. read the complete version of this beautifully written, brilliant article at https://www.duncanmackenzie.net/blog/inevitable-cost-of-focusing-on-only-features/

|

Scooped by

nrip

|

Software is eating the world. But progress in software technology itself largely stalled around 1996. Here’s what we had then, in chronological order:

LISP, Algol, Basic, APL, Unix, C, SQL, Oracle, Smalltalk, Windows, C++, LabView, HyperCard, Mathematica, Haskell, WWW, Python, Mosaic, Java, JavaScript, Ruby, Flash, Postgres.

Since 1996 we’ve gotten:

IntelliJ, Eclipse, ASP, Spring, Rails, Scala, AWS, Clojure, Heroku, V8, Go, Rust, React, Docker, Kubernetes, Wasm.

All of these latter technologies are useful incremental improvements on top of the foundational technologies that came before. For example, Rails was a great improvement in web application productivity, achieved by gluing together a bunch of existing technologies in a nicely structured way. But it didn’t invent anything fundamentally new. Likewise, V8 made new applications possible by speeding up JavaScript, extending techniques invented in Smalltalk and Java.

But since 1996 almost everything has been cleverly repackaging and re-engineering prior inventions. Or adding leaky layers to partially paper over problems below. Nothing is obsoleted, and the teetering stack grows ever higher. Yes, there has been progress, but it is localized and tame. We seem to have lost the nerve to upset the status quo.

More at https://alarmingdevelopment.org/?p=1475

|
